What is the A2A Protocol?

The A2A Protocol is an open standard that enables AI agents to communicate and collaborate. It provides a common language for agents built by different developers, on different frameworks, and across different organizations, so they can work together as peers rather than in silos. In this context, agents are autonomous problem-solvers that act independently within their environments.

Why use A2A?

A2A addresses key challenges in multi-agent collaboration by providing a standardized way for agents to interact. This section explains what A2A solves and the benefits it provides.

Problems A2A solves

Consider a user asking an AI assistant to plan an international trip. This involves coordinating multiple specialist agents, such as:

- A flight booking agent

- A hotel booking agent

- A local travel recommendation agent

- A currency conversion agent

Without A2A, integrating these agents typically runs into several issues:

Agent exposure problem: Developers often wrap agents as “tools” to expose them to other agents, much as tools are exposed through the Model Context Protocol (MCP). This is inefficient because agents are designed to negotiate directly with one another, and wrapping them as tools constrains their capabilities. A2A allows agents to be exposed as agents, without this wrapper.

Custom integrations: Each interaction requires bespoke point-to-point integration, creating heavy engineering overhead.

Slow innovation: Custom development for every new integration slows down iteration and ecosystem growth.

Scalability issues: As the number of agents and interactions grows, systems become difficult to scale and maintain.

Limited interoperability: Ad-hoc integrations prevent an organic, composable agent ecosystem from forming.

Security gaps: One-off communication often lacks consistent security practices.

A2A solves these challenges by establishing reliable, secure interoperability between AI agents.
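To make the custom-integration and scalability points concrete, here is a rough back-of-the-envelope sketch. It assumes the worst case, where every pair of agents needs its own bespoke integration, and compares that with each agent implementing one shared protocol. The function names are illustrative only.

```python
from math import comb


def pairwise_integrations(agent_count: int) -> int:
    """Worst case without a standard: one bespoke integration per pair of agents."""
    return comb(agent_count, 2)


def protocol_integrations(agent_count: int) -> int:
    """With a shared protocol: each agent implements the protocol once."""
    return agent_count


# The trip example has five participants: the assistant plus four specialists.
for n in (5, 10, 50):
    print(n, pairwise_integrations(n), protocol_integrations(n))
```

Under this assumption, the bespoke approach grows quadratically with the number of agents, while the shared-protocol approach grows linearly.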

Example scenario

This section illustrates how A2A (Agent2Agent) enables complex interactions among AI agents.

A complex user request

A user interacts with an AI assistant and gives a complex prompt like “plan an international trip”.

graph LR
    User[User] --> Prompt[Prompt] --> AI_Assistant[AI Assistant]

The need for collaboration

The assistant realizes it must coordinate multiple specialist agents: flight booking, hotel booking, currency conversion, and local travel agents.

graph LR
    subgraph "Specialist Agents"
        FBA[✈️ Flight Booking Agent]
        HRA[🏨 Hotel Booking Agent]
        CCA[💱 Currency Conversion Agent]
        LTA[🚌 Local Travel Agent]
    end
    AI_Assistant[🤖 AI Assistant] --> FBA
    AI_Assistant --> HRA
    AI_Assistant --> CCA
    AI_Assistant --> LTA

Interoperability challenge

Core issue: these agents cannot work together because each is developed and deployed independently.

Without a standard protocol, these agents cannot collaborate, and they cannot reliably discover what the others can do. Each agent (flight, hotel, currency, travel) remains isolated.

The A2A solution

A2A provides standard methods and data structures that allow agents to communicate regardless of their underlying implementation. The same agents can then be composed into an interconnected system through one shared protocol.

The assistant becomes the coordinator, receiving consolidated information from all A2A-enabled agents and presenting a single complete travel plan as a seamless response to the user’s original prompt.
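The coordinator role described above can be sketched as a simple fan-out and gather. This is a minimal illustration, not the protocol's API: `call_specialist` is a hypothetical stand-in for a real A2A call, and the agent names and response shape are assumptions made for the example.

```python
import asyncio


async def call_specialist(name: str, request: str) -> dict:
    """Hypothetical stand-in for an A2A call to a remote specialist agent."""
    await asyncio.sleep(0)  # placeholder for the network round trip
    return {"agent": name, "result": f"{name} handled: {request}"}


async def plan_trip(request: str) -> dict:
    specialists = [
        "flight-booking",
        "hotel-booking",
        "currency-conversion",
        "local-travel",
    ]
    # Fan out to every specialist concurrently; gather keeps the input order.
    responses = await asyncio.gather(
        *(call_specialist(name, request) for name in specialists)
    )
    # Consolidate the individual answers into one plan for the user.
    return {
        "request": request,
        "plan": {r["agent"]: r["result"] for r in responses},
    }


plan = asyncio.run(plan_trip("plan an international trip"))
print(plan["plan"])
```

The assistant never needs to know how each specialist is implemented; it only depends on the shared call shape.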

Core benefits of A2A

Adopting A2A provides significant benefits across the agent ecosystem:

Secure collaboration: Without a standard, it’s hard to ensure secure agent-to-agent communication. A2A uses HTTPS and supports opaque operations so agents don’t need to reveal their internal workings while collaborating.

Interoperability: A2A breaks down silos across agent ecosystems, enabling agents from different vendors and frameworks to work together.

Agent autonomy: A2A lets agents remain autonomous, acting as independent entities while collaborating with others.

Reduced integration complexity: Standardized communication allows teams to focus on the unique value of their agents rather than bespoke glue code.

Support for long-running operations: A2A supports long-running operations (LRO) through asynchronous execution, with streaming updates delivered via Server-Sent Events (SSE).
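As an illustration of the streaming side, the sketch below parses a Server-Sent Events stream into JSON payloads. It handles only `data:` fields and comment lines, which is enough to show the idea. The event payloads (`kind`, `state`, `name`) are hypothetical shapes chosen for the example, not fields confirmed by this document.

```python
import json
from typing import Iterable, Iterator


def iter_sse_data(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the JSON payload of each complete SSE event in `lines`."""
    data_parts = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data_parts:
                yield json.loads("\n".join(data_parts))
                data_parts = []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # A single leading space after the colon is not part of the value.
            data_parts.append(value[1:] if value.startswith(" ") else value)
    # An event without a terminating blank line is incomplete and is dropped.


# Hypothetical stream contents for a task that produces one artifact.
sample_stream = [
    'data: {"kind": "status-update", "state": "working"}\n',
    "\n",
    ": keep-alive\n",
    'data: {"kind": "artifact-update", "name": "Artifact A"}\n',
    "\n",
    'data: {"kind": "status-update", "state": "completed"}\n',
    "\n",
]

events = list(iter_sse_data(sample_stream))
print([event["kind"] for event in events])
```

In a real client the `lines` iterable would come from an open HTTP response rather than a list, so updates can be handled as they arrive.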

Key design principles

A2A is designed with broad adoption, enterprise capabilities, and future-proofing in mind:

Simplicity: A2A builds on existing standards like HTTP, JSON-RPC, and SSE. This avoids reinventing core technology and accelerates adoption.

Enterprise-ready: A2A aligns with standard web practices for authentication, authorization, security, privacy, tracing, and monitoring.

Async-first: A2A natively supports long-running tasks and scenarios where agents/users may not maintain continuous connections, via streaming and push notifications.

Modality-agnostic: Agents can communicate using multiple content types, enabling richer interactions beyond plain text.

Opaque execution: Agents can collaborate without exposing internal logic, memory, or proprietary tools. Interactions rely on declared capabilities and exchanged context, protecting IP and improving security.
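The Simplicity principle above names JSON-RPC as one of the building blocks. The sketch below shows what a JSON-RPC 2.0 request and response envelope could look like for an agent call. The envelope fields (`jsonrpc`, `id`, `method`, `params`, `result`, `error`) come from JSON-RPC 2.0; the method name `sendMessage` is borrowed from the request lifecycle in this document, and the `params` and `result` shapes are assumptions for illustration.

```python
import json
import uuid


def build_jsonrpc_request(method: str, params: dict) -> dict:
    """Wrap a call in a JSON-RPC 2.0 request envelope."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": method,
        "params": params,
    }


def parse_jsonrpc_response(raw: str, expected_id: str) -> dict:
    """Return the result of a JSON-RPC 2.0 response, or raise on an error."""
    response = json.loads(raw)
    if response.get("jsonrpc") != "2.0" or response.get("id") != expected_id:
        raise ValueError("not a matching JSON-RPC 2.0 response")
    if "error" in response:
        raise RuntimeError(f"agent returned an error: {response['error']}")
    return response["result"]


# Hypothetical params shape; the real message structure is defined by the protocol.
request = build_jsonrpc_request(
    "sendMessage",
    {"message": {"role": "user", "text": "Find flights to Lisbon"}},
)

# Simulated server reply, so the example runs without a network.
simulated_reply = json.dumps(
    {"jsonrpc": "2.0", "id": request["id"], "result": {"state": "submitted"}}
)
result = parse_jsonrpc_response(simulated_reply, request["id"])
print(result)
```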

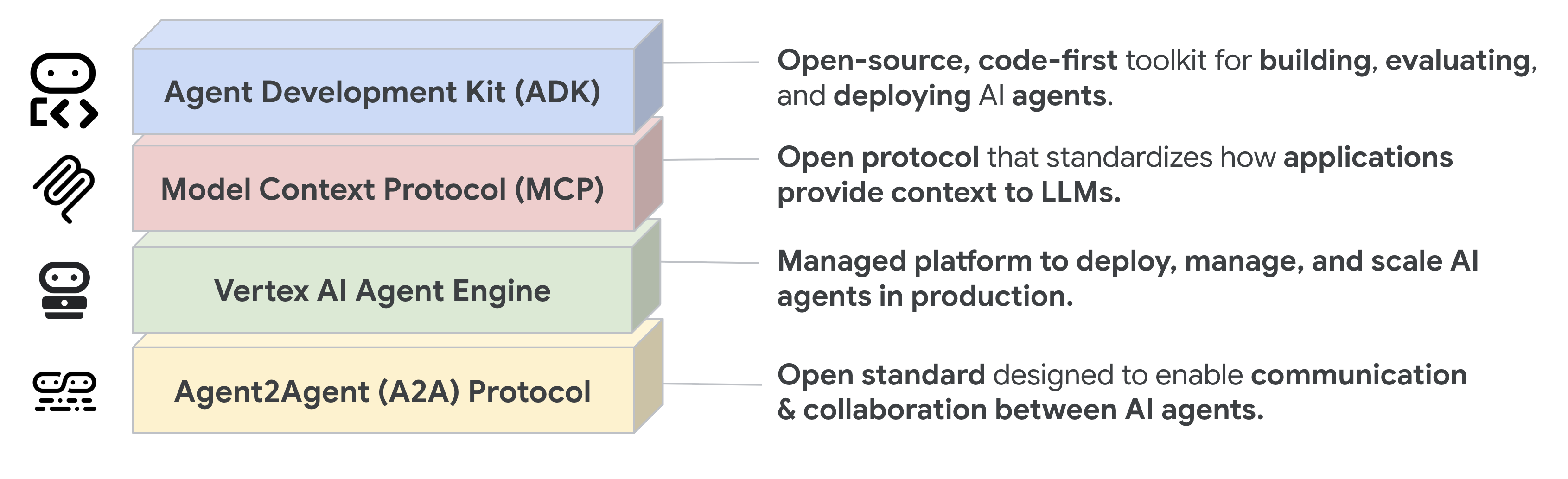

Understanding the agent stack: A2A, MCP, frameworks, and models

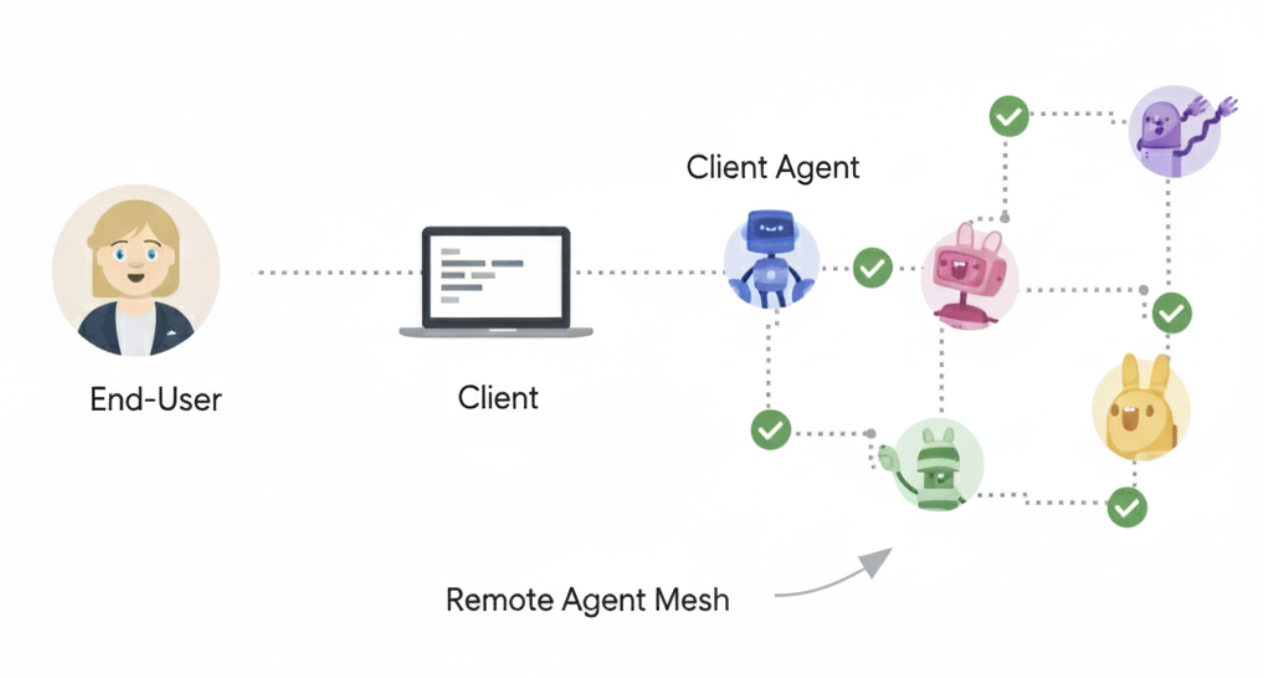

A2A sits within a broader agent stack:

- A2A: Standardizes communication among agents deployed across organizations and built with different frameworks

- MCP: Connects models to data and external resources

- Frameworks (e.g., ADK): Toolkits for building agents

- Models: The reasoning foundation for agents, which can be any LLM

A2A and MCP

In the broader ecosystem of AI communication protocols, you may be familiar with standards intended to facilitate interactions between agents, models, and tools. The Model Context Protocol (MCP) is an emerging standard focused on connecting LLMs to data and external resources.

The Agent2Agent (A2A) protocol standardizes communication between AI agents, especially agents deployed in external systems. A2A is positioned as complementary to MCP, addressing a different (but related) part of the problem.

MCP focus: Reduce the complexity of connecting agents to tools and data. Tools are typically stateless and perform specific predefined functions (e.g., calculators, database queries).

A2A focus: Enable agents to collaborate in their native modality, communicating as agents (or users) rather than being constrained to tool-like interactions. This enables complex multi-turn interactions where agents reason, plan, and delegate tasks to other agents, including negotiation and clarification steps.

Wrapping agents as simple tools is inherently limited because it cannot capture the full capability of an agent. This key distinction is discussed in detail in “Agents are not tools”.
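The difference between a tool call and an agent interaction can be sketched in a few lines. The example below is a toy model only: `convert_currency` stands for a stateless tool, while the hypothetical `HotelAgent` keeps task state across turns and asks for clarification when information is missing. The state names used here are illustrative assumptions.

```python
def convert_currency(amount: float, rate: float) -> float:
    """Tool-style call: stateless, one input, one predefined output."""
    return round(amount * rate, 2)


class HotelAgent:
    """Hypothetical agent-style peer that keeps task state across turns."""

    REQUIRED = ("city", "nights")

    def __init__(self) -> None:
        self.state = "submitted"
        self.details = {}

    def send(self, message: dict) -> dict:
        # Merge the new turn into what the agent already knows about the task.
        self.details.update(message)
        missing = [key for key in self.REQUIRED if key not in self.details]
        if missing:
            # Clarification step: the agent asks instead of failing.
            self.state = "input-required"
            return {"state": self.state, "question": "Please provide: " + ", ".join(missing)}
        self.state = "completed"
        return {
            "state": self.state,
            "result": f"Booked {self.details['nights']} nights in {self.details['city']}",
        }


agent = HotelAgent()
first = agent.send({"city": "Lisbon"})   # agent asks for the missing detail
second = agent.send({"nights": 3})       # same task continues in a second turn
print(first)
print(second)
```

A tool wrapper would have to reject the first call outright; the agent-style interaction lets the conversation continue until the task can be completed.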

For a deeper comparison, see the A2A vs MCP document.

A2A and ADK

Agent Development Kit (ADK) is an open-source agent development toolkit developed by Google. It is a flexible and modular framework for building and deploying agents. While optimized for Gemini and the Google ecosystem, ADK is model-agnostic, deployment-agnostic, and built for compatibility with other frameworks. A2A, by contrast, is the communication protocol: it enables agent-to-agent communication regardless of the framework used to build the agents (e.g., ADK, LangGraph, or CrewAI).

A2A request lifecycle

An A2A request moves through four main steps: agent discovery, authentication, a sendMessage call, and a sendMessageStream call. The following diagram shows how the client, the A2A server, and the auth server interact.

sequenceDiagram
    participant Client as Client
    participant A2A_Server as A2A Server
    participant Auth_Server as Auth Server
    rect rgb(240, 240, 240)
        Note over Client, A2A_Server: 1. Agent discovery
        Client->>A2A_Server: GET AgentCard (/.well-known/agent-card)
        A2A_Server-->>Client: Return AgentCard
    end
    rect rgb(240, 240, 240)
        Note over Client, Auth_Server: 2. Authentication
        Client->>Client: Parse AgentCard to get security scheme
        alt Security scheme is "openIdConnect"
            Client->>Auth_Server: Request token via "authorizationUrl" and "tokenUrl"
            Auth_Server-->>Client: Return JWT
        end
    end
    rect rgb(240, 240, 240)
        Note over Client, A2A_Server: 3. sendMessage API
        Client->>Client: Parse AgentCard and send request to "url"
        Client->>A2A_Server: POST /sendMessage (with JWT)
        A2A_Server->>A2A_Server: Process message and create task
        A2A_Server-->>Client: Return task response
    end
    rect rgb(240, 240, 240)
        Note over Client, A2A_Server: 4. sendMessageStream API
        Client->>A2A_Server: POST /sendMessageStream (with JWT)
        A2A_Server-->>Client: Stream: Task (submitted)
        A2A_Server-->>Client: Stream: Task status update (working)
        A2A_Server-->>Client: Stream: Task artifact update (Artifact A)
        A2A_Server-->>Client: Stream: Task artifact update (Artifact B)
        A2A_Server-->>Client: Stream: Task status update (completed)
    end
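Steps 1 to 3 of the diagram above can be sketched from the client's side as follows. This is a minimal offline illustration under stated assumptions: the AgentCard contents, the `securitySchemes` layout, the example URLs, the placeholder token, and the request body are all hypothetical. Only the `url` field, the `openIdConnect` scheme name, and the `/sendMessage` path are taken from the diagram. The request is built but not sent, so the example runs without a network.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical AgentCard, as it might be returned by the discovery request.
AGENT_CARD = {
    "name": "Flight Booking Agent",
    "url": "https://flights.example.com/a2a",
    "securitySchemes": {
        "oidc": {"type": "openIdConnect", "tokenUrl": "https://auth.example.com/token"}
    },
}


def find_security_scheme(card: dict, scheme_type: str) -> Optional[dict]:
    """Step 2: look up a declared security scheme of the given type."""
    for scheme in card.get("securitySchemes", {}).values():
        if scheme.get("type") == scheme_type:
            return scheme
    return None


def build_send_message_request(card: dict, token: str, text: str) -> urllib.request.Request:
    """Step 3: build an authenticated POST to the URL declared in the card."""
    body = json.dumps({"message": {"role": "user", "text": text}}).encode("utf-8")
    return urllib.request.Request(
        url=card["url"].rstrip("/") + "/sendMessage",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


scheme = find_security_scheme(AGENT_CARD, "openIdConnect")
token = "example-jwt"  # placeholder; a real client obtains this from the auth server
request = build_send_message_request(AGENT_CARD, token, "Find flights to Lisbon")
print(request.get_method(), request.full_url)
# urllib.request.urlopen(request) would send it; omitted to keep the sketch offline.
```

Step 4 follows the same pattern against `/sendMessageStream`, with the response read incrementally as a stream of events rather than as a single body.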

Next steps

Learn the key concepts that form the foundation of the A2A protocol.